Using Metal Performance Shaders for Real-Time Fast Style Transfer

I want to describe how we can use the results of deep learning in iOS applications to apply a creative effect to the camera stream in real time.

Style Transfer

Style Transfer is an algorithm that separates the content of an image from its style.

The two can then be combined again with components of other images.

Fast Style Transfer

The main disadvantage of the Style Transfer algorithm is that it is quite slow and requires a lot of computation.

Fast Style Transfer addresses this with 2 main ideas:

- Generate a dataset from photos using the Style Transfer algorithm and train a neural network on it.

- Use a sequence of Residual blocks to approximate the style.

A Residual block makes it possible to build a more complex approximation. It was originally used in ResNet.

These blocks help deep neural networks avoid the loss of precision that otherwise comes with adding more layers.
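To make the idea concrete, here is a minimal sketch of what a Residual block computes: the input is added back to the output of the block's inner layers. The `toy_transform` function is a hypothetical stand-in for the real inner layers (convolution, normalization, activation), which are not shown here.

```python
from typing import Callable, List

Vector = List[float]


def residual_block(x: Vector, transform: Callable[[Vector], Vector]) -> Vector:
    """Return x + transform(x): the skip connection of a residual block."""
    fx = transform(x)
    if len(fx) != len(x):
        raise ValueError("transform must preserve the input shape")
    return [a + b for a, b in zip(x, fx)]


def toy_transform(x: Vector) -> Vector:
    # Hypothetical placeholder for the inner layers of the block.
    return [0.1 * max(v, 0.0) for v in x]


if __name__ == "__main__":
    print(residual_block([1.0, -2.0, 3.0], toy_transform))
```

Because the input passes through unchanged, the inner layers only have to learn a correction on top of it.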

I’m using an anime-style image to train on our dataset:

Python code for Style Transfer:

Most of the code for Fast Style Transfer comes from the PyTorch examples:

My script uses a model tuned for iOS devices.

It is a compromise between performance and image quality.

Neural Network Model:

The implementation is available as a Google Colab notebook.

You can run it to generate the weights:

iOS and iPhones

Current iPhones are powerful devices: they contain a GPU and an API for running heavy computations on it.

Metal Performance Shaders

Neural networks approximate functions using, for the most part, matrix multiplications.
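As an illustration, a convolution can be rewritten as a single matrix-vector product by unrolling every image patch into a row (often called im2col). The sketch below assumes one channel, stride 1, and no padding, and is plain Python for readability, not what runs on the GPU.

```python
from typing import List

Matrix = List[List[float]]


def im2col(image: Matrix, k: int) -> Matrix:
    """Unroll every k x k patch of a single-channel image into one row."""
    h, w = len(image), len(image[0])
    rows = []
    for y in range(h - k + 1):
        for x in range(w - k + 1):
            rows.append([image[y + dy][x + dx] for dy in range(k) for dx in range(k)])
    return rows


def matvec(m: Matrix, v: List[float]) -> List[float]:
    """Multiply a matrix by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def conv2d_as_matmul(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid, stride-1 convolution (cross-correlation) via one matrix product."""
    k = len(kernel)
    out_w = len(image[0]) - k + 1
    flat_kernel = [v for row in kernel for v in row]
    flat_out = matvec(im2col(image, k), flat_kernel)
    # Fold the flat result back into rows of the output image.
    return [flat_out[i:i + out_w] for i in range(0, len(flat_out), out_w)]
```

Every output pixel is an independent dot product, which is why this kind of work maps well onto a GPU.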

We can use the GPU to accelerate these massive calculations. Metal provides direct access to the GPU.

Metal is an API for direct access to the GPU. We can use it for advanced high-performance graphics, 3D, and heavy computations.

Metal Performance Shaders is a collection of shader implementations that we can use in our applications.

The library also contains neural network implementations.

Why not CoreML?

CoreML is a general-purpose framework: we have to conform to its API, provide input data in the format it expects, and read results back in the format it produces.

These steps require a considerable amount of CPU time for pre- and post-processing.

I also ran into many problems converting the PyTorch model to a CoreML model. Some layers were not supported.

Setup Metal stack for Rendering

The iPhone camera can deliver raw data in YUV instead of RGB.

This color format is used in video, TV, and video streaming because, with chroma subsampling, it requires less data than RGB. We take the same approach: load the YUV image data into a Metal texture and then convert it to RGB.
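For reference, the per-pixel math behind such a conversion can be sketched in a few lines. This is a CPU illustration in Python, not the project's Metal shader, and it assumes full-range 8-bit values with BT.601 coefficients; the camera may be configured for a different range or matrix.

```python
from typing import Tuple


def clamp_u8(value: float) -> int:
    """Round to the nearest integer and clamp to the 0..255 range."""
    return max(0, min(255, int(round(value))))


def yuv_to_rgb(y: int, u: int, v: int) -> Tuple[int, int, int]:
    """Convert one full-range 8-bit YUV (YCbCr) pixel to RGB.

    Assumption: BT.601 coefficients; swap them for BT.709 if needed.
    """
    cb = u - 128.0  # chroma is stored with an offset of 128
    cr = v - 128.0
    r = y + 1.402 * cr
    g = y - 0.344136 * cb - 0.714136 * cr
    b = y + 1.772 * cb
    return clamp_u8(r), clamp_u8(g), clamp_u8(b)
```

In a shader the same arithmetic is usually written as one matrix multiplication per pixel.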

Shader for converting from YUV to RGB:

There are a few main problems:

- We need to load PyTorch weights into the Convolution and InstanceNorm layers.

- We need to implement a Concatenate (+) operation in order to implement the Residual layer.

- We need to implement a Multiply operation to multiply by a constant value at the final step.

Load PyTorch Weights:

There is no out-of-the-box solution for loading a trained network. We need to set it up directly in code and implement loading of the raw weights into the layers.

Convolution:

I wrote a separate story about loading weights from the convolution layers of a PyTorch model:

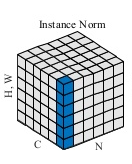
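One detail that matters here is memory layout. PyTorch stores Conv2d weights as [out, in, height, width], while MPSCNNConvolution expects [out, height, width, in]. The sketch below reorders a flat buffer accordingly; it assumes groups = 1, and the function name is mine, not taken from the project.

```python
from typing import List, Sequence


def oihw_to_ohwi(weights: Sequence[float], out_ch: int, in_ch: int,
                 k_h: int, k_w: int) -> List[float]:
    """Reorder a flat OIHW buffer (PyTorch Conv2d) into flat OHWI order."""
    if len(weights) != out_ch * in_ch * k_h * k_w:
        raise ValueError("buffer size does not match the given shape")
    result = []
    for o in range(out_ch):
        for y in range(k_h):
            for x in range(k_w):
                for i in range(in_ch):
                    # Index of element (o, i, y, x) in the OIHW source buffer.
                    src = ((o * in_ch + i) * k_h + y) * k_w + x
                    result.append(weights[src])
    return result
```

With PyTorch available, the same reordering is usually done with `weight.permute(0, 2, 3, 1)` before writing the raw floats to a file.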

InstanceNorm:

Loading data into InstanceNorm layers is not a problem: you can load the beta and gamma data directly, without any manipulation.
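To show where gamma and beta enter the computation, here is a minimal sketch of instance normalization for a single channel of a single image. The default `eps` and the biased variance are assumptions that match PyTorch's InstanceNorm2d defaults, where gamma and beta are stored as `weight` and `bias`.

```python
import math
from typing import List

Matrix = List[List[float]]


def instance_norm(channel: Matrix, gamma: float, beta: float,
                  eps: float = 1e-5) -> Matrix:
    """Normalize one channel of one image, then scale by gamma and shift by beta."""
    values = [v for row in channel for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)  # biased variance
    scale = gamma / math.sqrt(var + eps)
    return [[(v - mean) * scale + beta for v in row] for row in channel]
```

Since gamma and beta are one scalar per channel, they have no spatial layout to reorder, which is why they can be copied as-is.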

Basic layers that I used to build the model:

- Convolution Layer: the main neural network layer for image processing and computer vision.

- Instance Normalization: a layer that speeds up training, scales data between layers, and acts as regularization. It was created specifically for fast stylization (Instance Normalization: The Missing Ingredient for Fast Stylization).

- ReLU: an activation function that speeds up training and zeroes out negative values.

- Upscale: upscales the image. In combination with a Convolution Layer it works better than a “Transposed Convolution Layer”.
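As a rough sketch of the Upscale step, the function below enlarges a single-channel image by repeating pixels. Nearest-neighbor interpolation is an assumption on my side; the list above does not say which mode is used.

```python
from typing import List

Matrix = List[List[float]]


def upsample_nearest(image: Matrix, factor: int = 2) -> Matrix:
    """Repeat every pixel `factor` times along both axes."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    out = []
    for row in image:
        # Stretch the row horizontally, then emit it `factor` times.
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out
```

A regular convolution applied after this step then smooths the blocky result.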

Custom Layers:

- Concatenate (+) operation, implemented as a Metal Performance Shaders kernel

- Multiply (×) operation, implemented as a Metal Performance Shaders kernel

Set up the Neural Network:

Apply the layers to get the effect:

You can see the implementation details of the neural network in StyleTransferFilter.swift in the project sources.

Link to GitHub project:

https://github.com/dhrebeniuk/RealTimeFastStyleTransfer

Results: the attached video shows the effect applied in real time.