Convert MLMultiArray to Image for PyTorch models without performance lags

A common problem in iOS projects that use generative models (for example, models that produce images) is that the Core ML model returns the image as an MLMultiArray.

You can write an extension for MLMultiArray, something like this:

But this approach spends a lot of CPU time on the conversion itself, so it is not suitable for real-time use.
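To see where the CPU time goes, here is a minimal sketch of the work such a conversion has to do. It is written in Python purely for illustration (on device the same loop would live in the Swift extension), and it assumes a planar `(3, height, width)` layout with values already in the 0...255 range. The function name `planar_floats_to_rgba` is hypothetical.

```python
def planar_floats_to_rgba(values, height, width):
    """Convert planar CHW floats to interleaved RGBA bytes.

    `values` is a flat sequence of 3 * height * width floats laid out as
    all reds, then all greens, then all blues (an assumed layout).
    """
    plane = height * width
    if len(values) != 3 * plane:
        raise ValueError("expected 3 * height * width values")

    rgba = bytearray(4 * plane)
    for i in range(plane):                      # one iteration per pixel, on the CPU
        for c in range(3):                      # R, G, B planes
            v = int(round(values[c * plane + i]))
            rgba[4 * i + c] = max(0, min(255, v))   # clamp to a valid byte
        rgba[4 * i + 3] = 255                   # opaque alpha
    return bytes(rgba)
```

Every output value is read, clamped, and rewritten once per frame. For a 512x512 image that is 786,432 values, which is why doing this for each frame gets expensive.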

A better solution is to get an image directly from the Core ML model. This already works for some Create ML models (such as Style Transfer), so it is also possible for custom models.

At the time of writing, if you try to add a custom image output for a PyTorch model in the coremltools converter, you get an error:

Batch or sequence image output is unsupported for image output ...

But we can manually adjust the specification of a model produced by coremltools.

I got the main idea from this answer:

Let’s define a PyTorch model and convert it via coremltools:

Then we need to convert the model output from a multi-array to an image:
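A minimal sketch of that spec edit follows; it is not necessarily the exact code from the original post. It assumes the model's first output is the image, that its values are already in the 0...255 range, and that the output has a `(3, height, width)` shape. Depending on the converter version, the network output may first need reshaping to drop a batch dimension, which is not shown. The names `multiarray_output_to_image` and `convert_file` are hypothetical. `load_spec`, `save_spec`, and `FeatureTypes_pb2` are part of coremltools.

```python
def multiarray_output_to_image(spec, height, width, color_space, output_index=0):
    """Mark one output of a Core ML spec as an image instead of a multi-array.

    `spec` is the protobuf Model spec. Writing into `imageType` switches the
    output's type, because the feature types live in a protobuf oneof.
    """
    if height <= 0 or width <= 0:
        raise ValueError("height and width must be positive")
    output = spec.description.output[output_index]
    output.type.imageType.colorSpace = color_space
    output.type.imageType.height = height
    output.type.imageType.width = width
    return spec


def convert_file(src_path, dst_path, height, width):
    """Load a saved .mlmodel, switch its first output to an RGB image, save it."""
    # Imported here so the helper above has no hard dependency on coremltools.
    import coremltools as ct
    from coremltools.proto import FeatureTypes_pb2 as ft

    spec = ct.utils.load_spec(src_path)
    multiarray_output_to_image(spec, height, width, ft.ImageFeatureType.RGB)
    ct.utils.save_spec(spec, dst_path)
```

For example, `convert_file("model.mlmodel", "model_image.mlmodel", 256, 256)` would write a copy whose output Xcode shows as a 256x256 color image. The file names and size here are placeholders.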

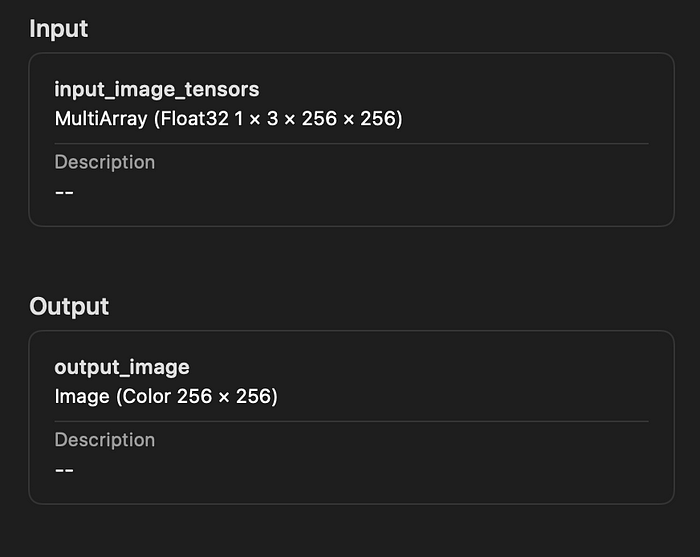

You can see the generated model in Xcode:

Later, in the Xcode project, you can get a CIImage from the CVPixelBuffer that the model returns.

iOS can render a CIImage directly via CIContext, or you can convert it to a UIImage.